The Next Big Lift?

Tim Bourgaize Murray explores opportunities for brokers to take on more of buy-side firms' data management burden.

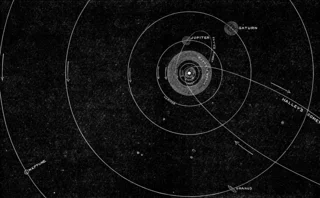

Your phone provider tracks your location. The fridge can ping you when you're low on milk. Your watch asks you to take a minute and breathe. Cars will soon drive us around by themselves. Yes, the Internet of Things in 2017 requires massive leaps of innovation, but at its core there is still a human choice: to augment the relationship we once had with these machines, and with the companies that build them. The reasons aren't hard to see. Cheaper, easier and healthier all come to mind. Automatic. And

Copyright Infopro Digital Limited. All rights reserved.